After setting up the Hub Server and configuring its Veritone settings, we also need to configure detailed Veritone AI parameters in Zoom using the Web Management Console. This involves three tasks: setting Veritone as the AI provider, creating a configuration for each AI engine, and mapping asset rules to those engines. Follow these steps:

AI Providers

You need to add one or more AI providers to work with Zoom. Follow these steps to add a provider:

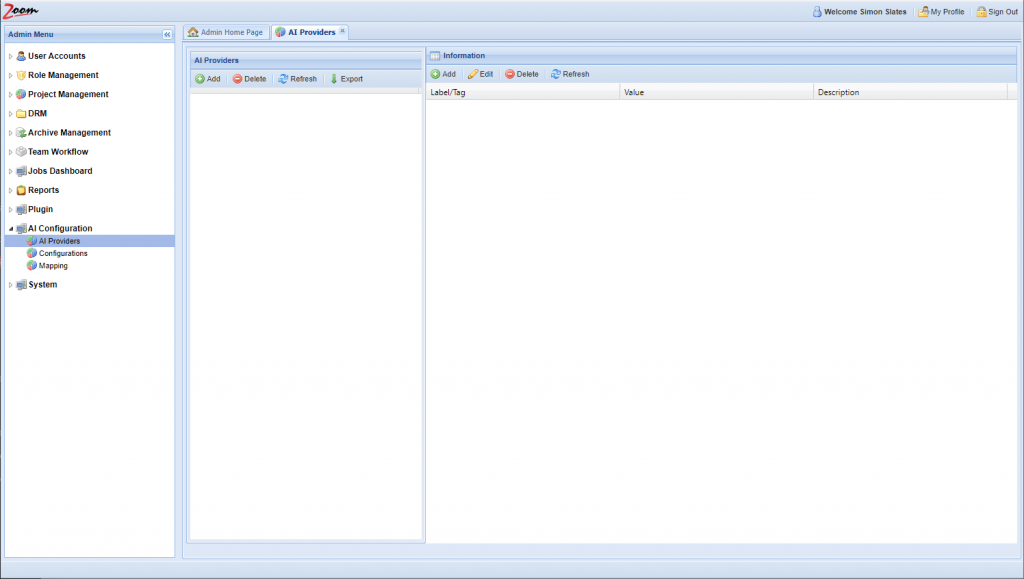

- Log in to the Web Management Console and click AI Providers under the AI Configuration node in the Admin Menu sidebar. You need to add an AI Provider here.

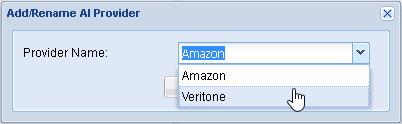

- Inside the AI Providers panel, click Add and choose Veritone from the list of AI Providers.

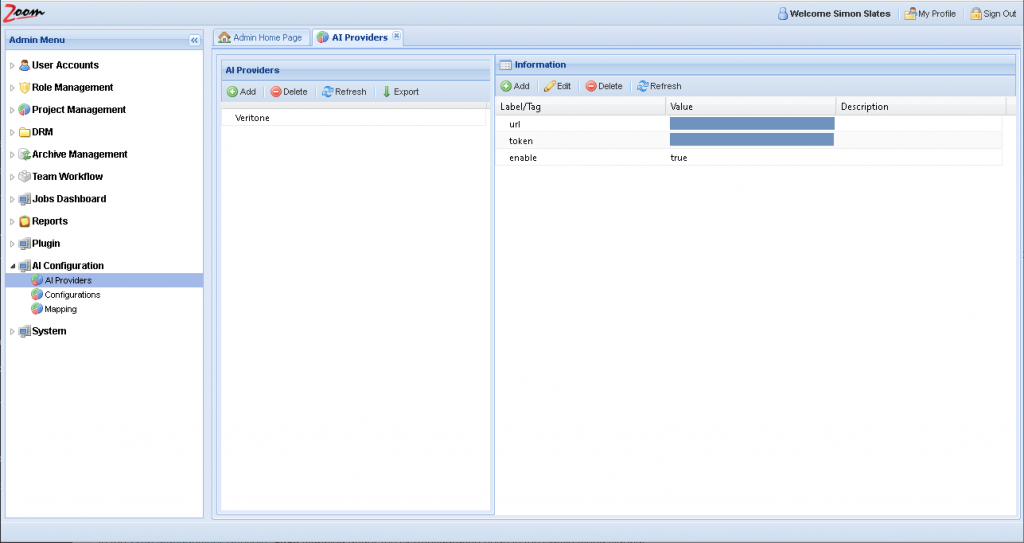

- Select the newly added row for Veritone. Its details are loaded in the Information panel on the right.

- Add/update these values in the Information Panel:

- url – enter the URL to access Veritone services. It should be the exact value given by Veritone. For example, https://uk.api.veritone.com/v3/graphql

- token – specify the API token used to authenticate with Veritone. Without the token, Zoom cannot authenticate to access AI services. The Veritone API token is generated in Veritone’s user interface. For more details, see Veritone’s documentation at https://docs.veritone.com/#/apis/authentication.

- enable – this is true by default, which enables the provider. Setting this value to false disables the provider in Zoom, so it is no longer used. If this value is missing from the configuration files, it is treated as true.

The values specified here are all case-sensitive. Other providers may need a different set of values.

- After updating the provider values, go to the AI Configurations page, described in the next section, to configure this provider's engines.
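
The provider values described above can be modeled as a small record. The sketch below is a minimal illustration, not Zoom's implementation: ProviderConfig, parse_provider, and auth_headers are hypothetical names, and auth_headers assumes Veritone accepts the API token as an HTTP bearer token (check Veritone's authentication documentation). It shows the two documented rules: labels are matched case-sensitively, and a missing enable value counts as true.

```python
from dataclasses import dataclass


@dataclass
class ProviderConfig:
    """Hypothetical model of the AI Provider values entered in the console."""
    url: str
    token: str
    enable: bool = True


def parse_provider(values: dict[str, str]) -> ProviderConfig:
    """Build a ProviderConfig from label/value pairs.

    Labels are looked up exactly, so "URL" or "Token" would not be found,
    mirroring the case-sensitive values.
    """
    # A missing "enable" entry is treated as true.
    raw_enable = values.get("enable", "true")
    # Assumption: only the exact strings "true" and "false" are meaningful.
    if raw_enable not in ("true", "false"):
        raise ValueError(f"enable must be 'true' or 'false', got {raw_enable!r}")
    return ProviderConfig(
        url=values["url"],
        token=values["token"],
        enable=raw_enable == "true",
    )


def auth_headers(cfg: ProviderConfig) -> dict[str, str]:
    """HTTP headers for calls to cfg.url (assumes a bearer-token scheme)."""
    return {"Authorization": f"Bearer {cfg.token}"}


if __name__ == "__main__":
    cfg = parse_provider({
        "url": "https://uk.api.veritone.com/v3/graphql",
        "token": "YOUR_API_TOKEN",  # placeholder, not a real token
    })
    print(cfg.enable)  # True, because "enable" was omitted
```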

AI Configurations

You need to add one configuration for each AI engine offered by your AI provider. The provider supplies the engine ID and AI type for each engine. Follow these steps to add the necessary configurations:
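
Because each configuration ties one engine ID to one AI type, and an engine/type pair may appear in only one configuration, it can help to check a planned set of configurations for repeats before entering them. A minimal sketch, assuming each configuration is represented as a dict of its Label/Value properties keyed by configuration name; duplicate_engine_types is a hypothetical helper, not part of Zoom, and the engine IDs are placeholders:

```python
from collections import Counter


def duplicate_engine_types(configs: dict[str, dict[str, str]]) -> list[tuple[str, str]]:
    """Return (veritoneEngineId, type) pairs that appear in more than one configuration.

    `configs` maps a configuration name (names are unique, so dict keys fit)
    to that configuration's Label/Value properties.
    """
    counts = Counter(
        (props["veritoneEngineId"], props["type"]) for props in configs.values()
    )
    return sorted(pair for pair, n in counts.items() if n > 1)


if __name__ == "__main__":
    planned = {
        "Transcription EN": {"type": "transcription", "veritoneEngineId": "ENGINE_A"},
        "Transcription copy": {"type": "transcription", "veritoneEngineId": "ENGINE_A"},
        "Faces": {"type": "face", "veritoneEngineId": "ENGINE_B"},
    }
    print(duplicate_engine_types(planned))  # [('ENGINE_A', 'transcription')]
```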

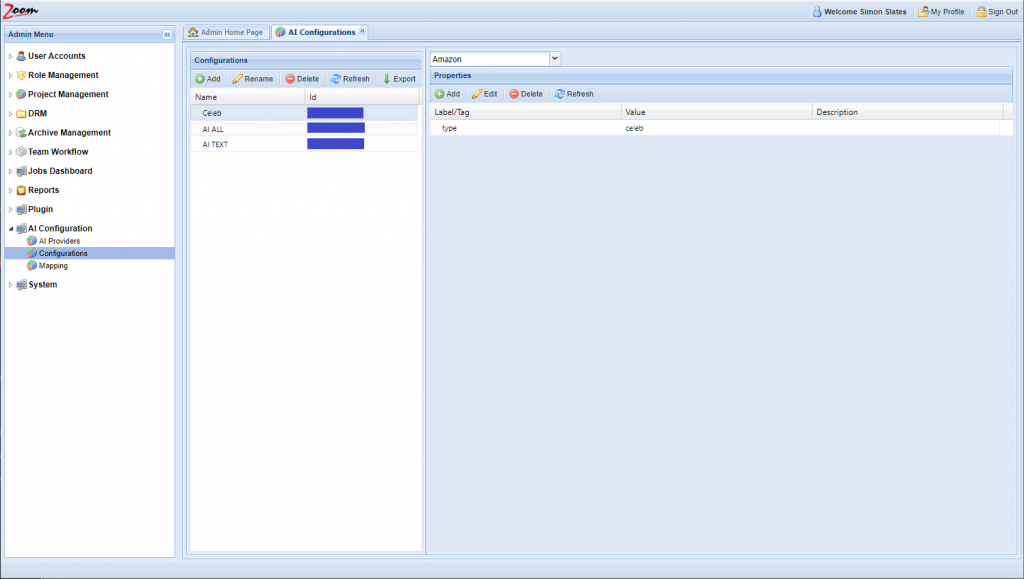

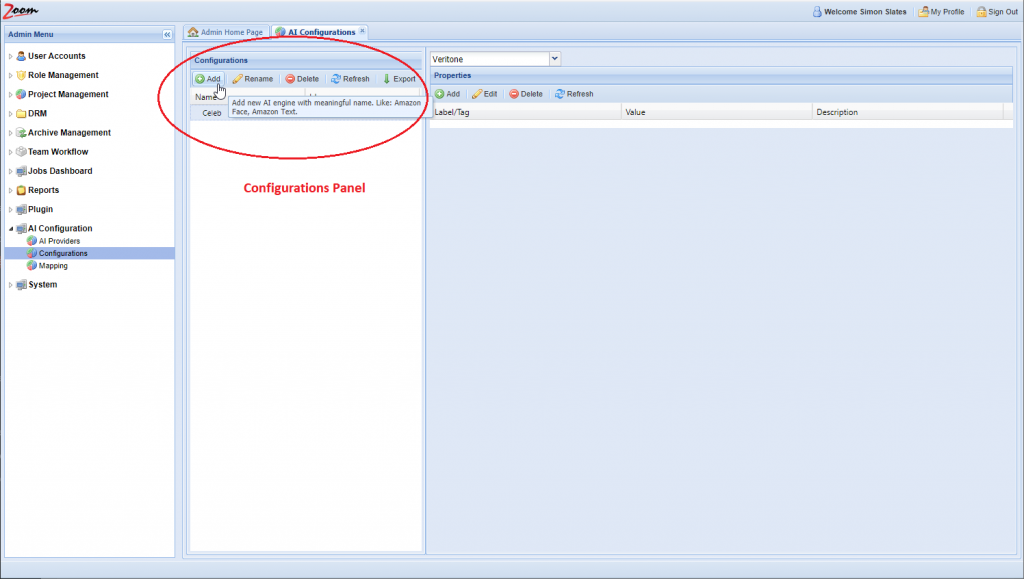

- In the Web Management Console, click Configurations under the AI Configuration node in the Admin Menu sidebar. You need to add AI configurations here.

- Inside the Configurations panel, click Add to add a new configuration.

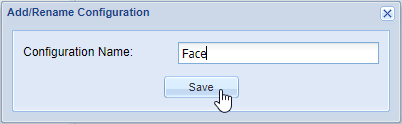

- Provide a unique name for the new configuration. Names that describe the task performed by the engine work best.

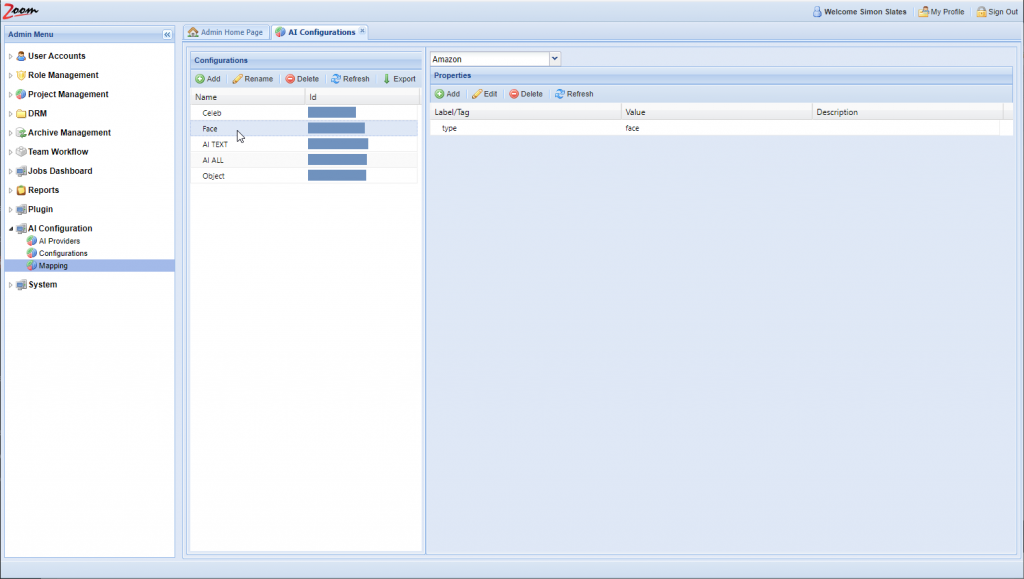

- Select the newly added row for the new configuration. Its details are loaded in the Properties panel on the right.

- From the AI Providers dropdown box above the Properties panel, choose Veritone, the provider we added in the previous section.

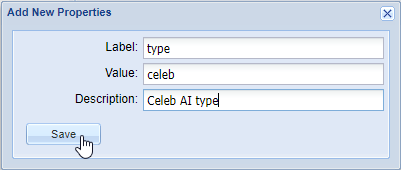

- Click Add in the Properties panel for each property that you want to add for the new AI Configuration.

- At a minimum, fill in the Label and Value for each property. The Description is optional.

For example, for the AI Type property, enter type as the Label and the engine's AI type, such as transcription, as the Value.

- Each configuration that you add should have exactly one AI Engine property and one AI Type property. Some configurations also need additional properties, depending on the AI engine being configured. Each combination of AI engine and AI type must be configured in exactly one configuration and cannot be repeated. Add these properties for the new configuration:

| Label | Value |
|---|---|
| type | The AI type to associate with this configuration. This is the AI engine's AI type as provided by Veritone. |
| veritoneEngineId | The engine ID to associate with this configuration. It must be exactly the value provided by Veritone. |
| payload:libraryId | The Library ID, if your AI engine requires one. The given library is passed to the engine as an argument. Veritone uses libraries to train engines to look for specific faces, celebrities, logos, or objects in the footage. |
| payload:<key> | Any extra argument to pass to the engine. To create an argument for an engine, prefix its name with payload: (payload followed by a colon). For example, to pass a debug=true argument to the engine, enter payload:debug as the Label and true as the Value. |
| enable | Optional. Set this property to false to disable this AI engine; no further jobs will be created with it. Similarly, setting enable to false for an AI provider disables that provider and all the engines associated with it. |
| minConfidence | A confidence level from 0.00 to 1.00. Only data with a confidence level equal to or higher than this value is added to the Zoom database; the rest is discarded. The default value of 0 is used when this property is not specified. |
| minimumValueLength | The minimum length of a value to save in Zoom. Any value with fewer characters than this is ignored. The default value is 0, meaning all values from Veritone AI engines are pushed to and saved on the Zoom server. This default is used when the property is not set. |
| maxJoinWords | Joins AI data sent by the AI engine. AI data is sometimes too granular, for example when transcription engines return one word every few milliseconds; this property joins the AI data every few words. The value must be greater than one to have any effect. |
| maxJoinWordsRange | Similar to maxJoinWords, but joins AI data based on the number of seconds elapsed. The value must be greater than one to have any effect. |

type and veritoneEngineId must be specified for each AI engine. payload:libraryId and payload:<key> are also mandatory when extra values need to be passed to the AI engine.

enable, minConfidence, minimumValueLength, maxJoinWords, and maxJoinWordsRange are optional. All property values are case-sensitive. maxJoinWords and maxJoinWordsRange work with any AI engine, not just transcription.

- After updating the configurations for the AI engines of your AI provider, go to the AI-Mapping page, described in the next section, to map the engines to asset rules.
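
To illustrate how these properties fit together, the sketch below parses a configuration's Label/Value pairs and applies the minConfidence, minimumValueLength, and maxJoinWords rules to a list of (value, confidence) records. It is a hypothetical model, not Zoom's code: EngineConfig, parse_engine_config, and post_process are invented names, the record shape is assumed, filtering is assumed to happen before joining, and maxJoinWordsRange (time-based joining) is left out.

```python
from dataclasses import dataclass, field

PAYLOAD_PREFIX = "payload:"
REQUIRED_LABELS = ("type", "veritoneEngineId")


@dataclass
class EngineConfig:
    """Hypothetical model of one AI Configuration's properties."""
    ai_type: str
    engine_id: str
    payload: dict[str, str] = field(default_factory=dict)
    enable: bool = True
    min_confidence: float = 0.0
    minimum_value_length: int = 0
    max_join_words: int = 0


def parse_engine_config(props: dict[str, str]) -> EngineConfig:
    """Turn the Label/Value pairs of a configuration into an EngineConfig."""
    missing = [label for label in REQUIRED_LABELS if label not in props]
    if missing:
        raise ValueError(f"missing required properties: {missing}")
    # "payload:debug" becomes engine argument "debug"; labels are case-sensitive.
    payload = {
        label[len(PAYLOAD_PREFIX):]: value
        for label, value in props.items()
        if label.startswith(PAYLOAD_PREFIX)
    }
    return EngineConfig(
        ai_type=props["type"],
        engine_id=props["veritoneEngineId"],
        payload=payload,
        # Assumption: anything other than "false" leaves the engine enabled.
        enable=props.get("enable", "true") != "false",
        min_confidence=float(props.get("minConfidence", "0")),
        minimum_value_length=int(props.get("minimumValueLength", "0")),
        max_join_words=int(props.get("maxJoinWords", "0")),
    )


def post_process(cfg: EngineConfig, records: list[tuple[str, float]]) -> list[str]:
    """Apply minConfidence, minimumValueLength, then maxJoinWords to (value, confidence) records."""
    kept = [
        value
        for value, confidence in records
        if confidence >= cfg.min_confidence and len(value) >= cfg.minimum_value_length
    ]
    n = cfg.max_join_words
    if n <= 1:  # maxJoinWords has no effect unless it is greater than one
        return kept
    # Join every n consecutive values into one entry.
    return [" ".join(kept[i:i + n]) for i in range(0, len(kept), n)]


if __name__ == "__main__":
    cfg = parse_engine_config({
        "type": "transcription",
        "veritoneEngineId": "ENGINE_ID_FROM_VERITONE",  # placeholder
        "payload:debug": "true",
        "minConfidence": "0.5",
        "maxJoinWords": "2",
    })
    print(cfg.payload)  # {'debug': 'true'}
    print(post_process(cfg, [("hello", 0.9), ("um", 0.2), ("world", 0.8), ("again", 0.7)]))
    # ['hello world', 'again']
```

Here "um" is dropped because its confidence is below 0.5, and the remaining words are joined two at a time.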

AI-Mapping

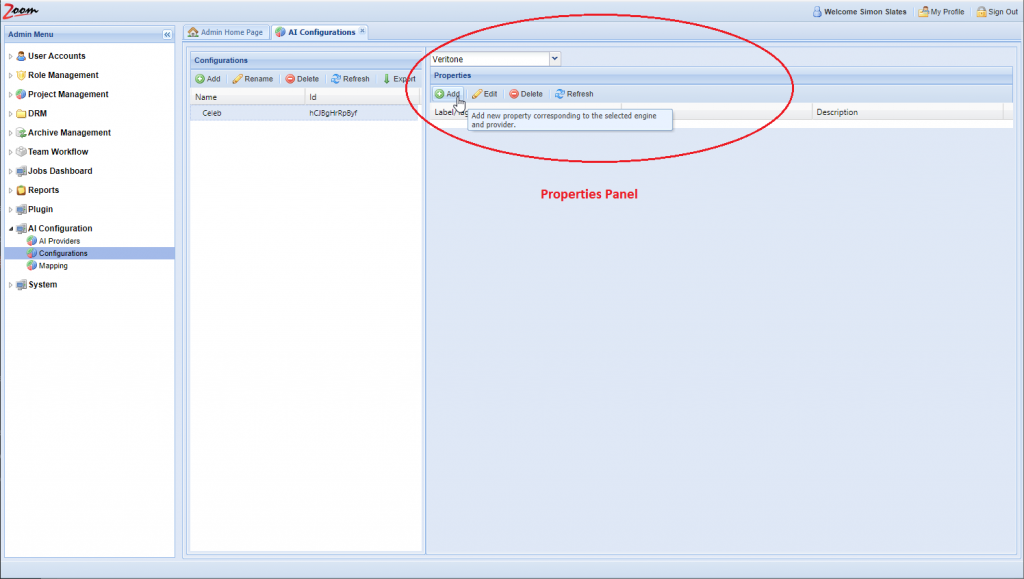

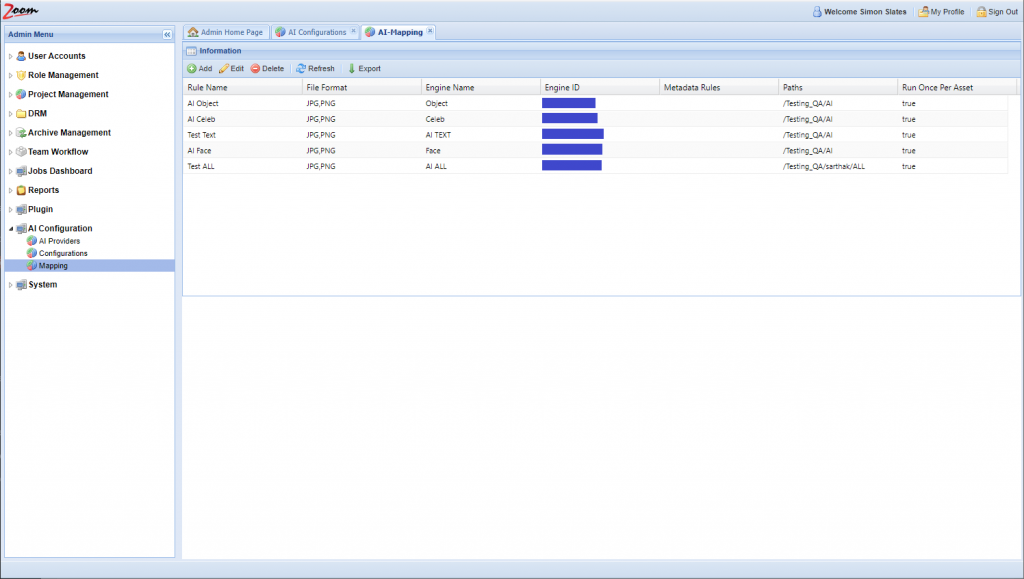

Finally, you need to map AI engines in Zoom to control which assets each engine analyzes. Follow these steps to map asset rules to an engine:

- In the Web Management Console, click Mapping under the AI Configuration node in the Admin Menu sidebar.

- Click Add to add a new mapping rule.

- Specify these values:

- Rule Name: specify a name for the mapping rule.

- File Format: specify a comma-separated list of file extensions associated with this mapping, for example: MP4, MXF. Only files matching the given extension(s) will be sent to the AI engine for analysis. If left blank, all file types are considered valid for this mapping.

- Engine: choose an AI engine for this mapping. Each mapping needs an engine. The associated engine will be used to extract the AI data as per the rules specified in this mapping.

- Metadata Rule: specify metadata key-value pairs to be matched for selecting a file. Multiple metadata property value pairs can be specified in this field, separated by commas only (no spaces). Only those assets that have these values for the specified properties will be sent for AI analysis.

Values can be specified like these examples:

- IPTC_City=Milan (Only assets with city value Milan will be selected for this engine).

- IPTC_COUNTRY=Italy,AND,IPTC_Season=2019 (Assets with the country Italy and the Season value 2019 will be selected for this engine).

Multiple metadata values should be separated by commas. AND/OR operators are also supported. Value comparison for metadata properties is case-insensitive, which means Zoom Server will match Japan with japan. When nothing is specified for this field, all assets are selected.

- Paths: specify the path to match assets from. Only files present in this path are selected for AI analysis.

- Run Once Per Asset: if this is enabled, an asset is analyzed with AI only once throughout its lifetime, even after it is moved, renamed, or a new revision is checked in.

Rule Name and Engine cannot be empty. Any other field left blank matches everything. For example, leaving Paths blank matches all paths on the Zoom Server.

- Click Save to save the mapping rule.
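
The matching rules above can be sketched as a single predicate. This is an illustration under stated assumptions, not Zoom Server's matching code: MappingRule and matches are hypothetical, Paths is treated as a single folder prefix, file extensions are compared case-insensitively, metadata operators are evaluated left to right, and two pairs without an explicit operator are assumed to be combined with AND. As documented, metadata values compare case-insensitively, blank optional fields match everything, and Rule Name and Engine are required.

```python
import posixpath
from dataclasses import dataclass


@dataclass
class MappingRule:
    """Hypothetical model of an AI mapping rule; blank optional fields match everything."""
    name: str
    engine: str
    file_format: str = ""    # e.g. "MP4, MXF"
    metadata_rule: str = ""  # e.g. "IPTC_COUNTRY=Italy,AND,IPTC_Season=2019"
    path: str = ""           # assumption: a single folder prefix such as "/projects/sport"

    def __post_init__(self) -> None:
        # Rule Name and Engine cannot be empty.
        if not self.name or not self.engine:
            raise ValueError("Rule Name and Engine are required")


def _format_ok(rule: MappingRule, asset_path: str) -> bool:
    if not rule.file_format.strip():
        return True
    # Assumption: extensions are compared case-insensitively ("MP4" matches "clip.mp4").
    allowed = {ext.strip().lower() for ext in rule.file_format.split(",") if ext.strip()}
    return posixpath.splitext(asset_path)[1].lstrip(".").lower() in allowed


def _metadata_ok(rule: MappingRule, metadata: dict[str, str]) -> bool:
    if not rule.metadata_rule:
        return True
    result = None
    op = "AND"
    # Assumption: tokens are evaluated left to right with no operator precedence.
    for token in rule.metadata_rule.split(","):
        if token in ("AND", "OR"):
            op = token
            continue
        key, _, expected = token.partition("=")
        # Values compare case-insensitively ("Japan" matches "japan");
        # property names are matched exactly here (assumption).
        hit = metadata.get(key, "").lower() == expected.lower()
        if result is None:
            result = hit
        elif op == "AND":
            result = result and hit
        else:
            result = result or hit
        op = "AND"  # assumption: pairs without an explicit operator are ANDed
    return bool(result)


def _path_ok(rule: MappingRule, asset_path: str) -> bool:
    if not rule.path:
        return True
    return asset_path.startswith(rule.path.rstrip("/") + "/")


def matches(rule: MappingRule, asset_path: str, metadata: dict[str, str]) -> bool:
    """True if the asset would be sent to rule.engine under this sketch's assumptions."""
    return (
        _format_ok(rule, asset_path)
        and _metadata_ok(rule, metadata)
        and _path_ok(rule, asset_path)
    )
```

For example, with file_format "MP4, MXF" and the rule IPTC_COUNTRY=Italy,AND,IPTC_Season=2019, an MP4 asset whose country is italy and season is 2019 matches, while the same asset with season 2020 does not.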

Veritone’s AI configuration in Zoom is now complete.

AI Types supported in Zoom

Zoom supports a fixed set of AI types. An AI type describes the kind of data an AI engine matches and returns. Your AI provider specifies the AI type of each of its engines, and you enter it as the type property in the AI Configurations section above. The following AI types are supported in Zoom:

- face – denotes and categorizes AI data related to human faces. This data includes various facial attributes, such as age, emotions, and race.

- object – identifies and names objects and items in the real world, like glasses, bicycles, crayons, ties, suits, etc.

- text – denotes extracted text (like OCR) from images or videos.

- celeb – identifies well-known celebrities and attaches their names to the data.

- transcription – this is used when speech (audio) is transcribed from an audio or video file.

You should check with your AI provider about the AI types provided with their AI engines.
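
Since configuration values are case-sensitive, a configuration's type value has to match one of these names exactly. A minimal sketch of that check, using a hypothetical AIType enum that lists only the five types above:

```python
from enum import Enum


class AIType(Enum):
    """The AI types listed as supported in Zoom (hypothetical enum, not a Zoom API)."""
    FACE = "face"
    OBJECT = "object"
    TEXT = "text"
    CELEB = "celeb"
    TRANSCRIPTION = "transcription"


def parse_ai_type(value: str) -> AIType:
    # Enum lookup by value is exact, mirroring the case-sensitive property values:
    # "transcription" is accepted, "Transcription" raises ValueError.
    return AIType(value)


if __name__ == "__main__":
    print(parse_ai_type("transcription"))  # AIType.TRANSCRIPTION
```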